A High-Throughput and Low-Cost Hardware Implementation on Image Haze Removal

paper

Qing Wang, Leilei Huang, Xiaoyang Zeng, Yibo Fan, "A High-Throughput and Low-cost Hardware Implementation of Image Haze Removal", submitted to the IEEE International Symposium on Circuits and Systems (ISCAS 2016). 4 pages.

research motivation

Images captured in bad weather often suffer a loss of visibility due to fog or haze in the atmosphere, because the light reflected from target objects is attenuated as it passes through the haze. What the camera records is a mixture of the original colors of the objects and the degradation effects of the haze. This makes subsequent image processing and computer vision steps harder.

overview

Many effective and efficient algorithms have been proposed for image haze removal. However, their complexity makes low-cost hardware implementation difficult. In this paper, we propose an optimized and efficient algorithm for hardware implementation of image haze removal. We have implemented our design on an Altera FPGA platform, where it produces visually pleasing results. Synthesis with a TSMC 65 nm library shows that it can reach a clock frequency of 500 MHz with a low power consumption of 13.1 mW. When applied to video haze removal, the proposed design achieves very high throughput thanks to its pipelined architecture. Our method is therefore suitable for low-cost, high-performance hardware implementation of image haze removal.

model of hazy image

In computer vision and digital image processing, the formation of a hazy image is commonly modeled as:

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr)$$

In this model, I is the observed hazy image and J is the original radiance of the objects. The radiance is attenuated as it passes through fog or haze, so J is multiplied by the transmission map t. t is the proportion of radiance that reaches the camera unaffected by haze, so its value lies in [0,1]. A is the atmospheric light, which makes up the other component of the hazy image. The goal of image dehazing is to estimate the airlight A and the transmission map t. The restored image can then be calculated as:

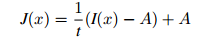
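As a rough illustration of the recovery step, the sketch below assumes the standard haze model I = J·t + A·(1 − t), where I denotes the observed hazy pixel, and inverts it to J = (I − A)/t + A. The function names, the lower bound `t_min` and the clamp to the 8-bit range are assumptions made for this example; they are not taken from the paper.

```python
def add_haze(j, t, a):
    """Forward model for one channel value: I = J*t + A*(1 - t)."""
    return j * t + a * (1.0 - t)


def recover(i, t, a, t_min=0.1):
    """Invert the haze model for one channel value.

    t_min is an assumed guard against dividing by a very small t.
    """
    t = max(t, t_min)
    j = (i - a) / t + a
    # Clamp to the 8-bit intensity range (also an assumption).
    return min(max(j, 0.0), 255.0)


if __name__ == "__main__":
    original = (40.0, 120.0, 200.0)   # hypothetical haze-free RGB pixel
    airlight = (230.0, 235.0, 240.0)  # hypothetical atmospheric light A
    t = 0.6                           # hypothetical transmission
    hazy = [add_haze(j, t, a) for j, a in zip(original, airlight)]
    restored = [recover(i, t, a) for i, a in zip(hazy, airlight)]
    print("hazy:", hazy)
    print("restored:", restored)
```

With exact A and t the round trip returns the original pixel; in practice the quality of the result depends on how well A and t are estimated.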

simple schematic of proposed hardware design

In our hardware architecture, the ATMOS module estimates the atmospheric light, and the GRAY module converts the original RGB image into a gray image. The OPMAT module scans the gray image patch by patch and produces the optimal t for every patch. To avoid block artifacts, the MEAN module applies a mean filter to refine the t produced by the OPMAT module. The RECOVERY module receives the refined t from the MEAN module and the estimated A from the ATMOS module, then produces the restored color image.

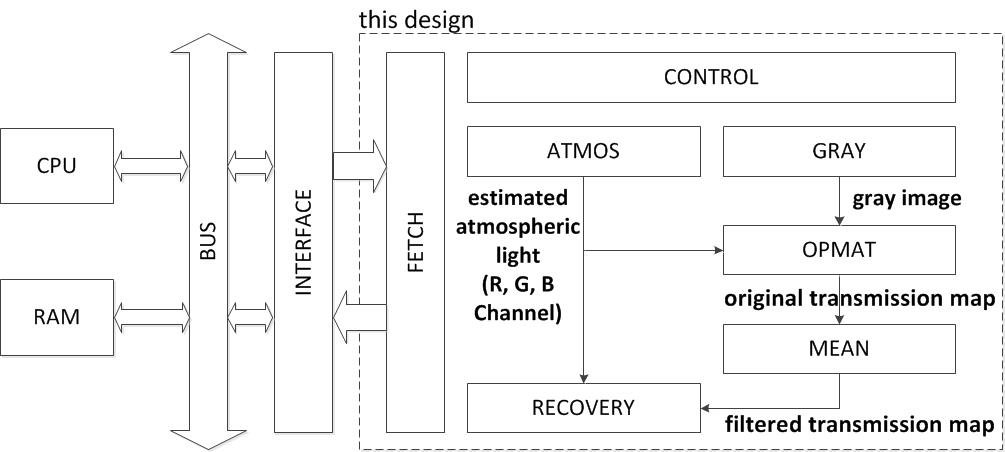

pipeline work flow of video dehazing

When applied to video haze removal, our proposed method can be separated into several steps that form a pipelined work flow and save execution time, as shown in Fig.5 and Fig.6. In Fig.6, N denotes the Nth frame, while P, Q, etc. denote 16×16 patches. The ATMOS and GRAY modules always work one frame ahead of the other three modules, since those modules need the atmospheric light of the frame they are processing. When the OPMAT module has worked out the t of patch Q+1, the MEAN module combines it with the values of the previous patches P, P+1 and Q. At that point all values of patch P are filtered and refined, so the RECOVERY module can produce the restored result for patch P.

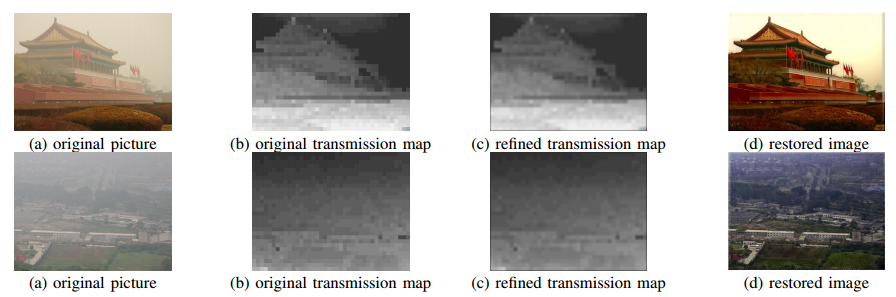
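One way to read the dependency between patches P, P+1, Q and Q+1 is sketched below. It assumes that OPMAT yields a single t per 16×16 patch, that Q is the patch directly below P, and that MEAN applies a 16×16 averaging window that starts at each pixel and extends to the right and downward. The window size and anchoring are assumptions for illustration; the actual filter in the design may differ. `refine_patch` is a hypothetical name.

```python
PATCH = 16  # patch edge length in pixels, as stated in the text


def refine_patch(t_p, t_p1, t_q, t_q1, size=PATCH):
    """Box-filter the pixels of patch P from four per-patch transmissions.

    t_p, t_p1: patch P and its right neighbour P+1.
    t_q, t_q1: the patches directly below them, Q and Q+1.
    Assumes a size x size window anchored at each pixel's top-left corner.
    Returns a size x size list of refined t values.
    """
    area = size * size
    refined = []
    for dy in range(size):
        row = []
        for dx in range(size):
            # Count how many window pixels fall into each of the four patches.
            w_p = (size - dx) * (size - dy)
            w_p1 = dx * (size - dy)
            w_q = (size - dx) * dy
            w_q1 = dx * dy
            row.append(
                (w_p * t_p + w_p1 * t_p1 + w_q * t_q + w_q1 * t_q1) / area
            )
        refined.append(row)
    return refined


if __name__ == "__main__":
    refined = refine_patch(0.8, 0.4, 0.6, 0.2)
    print(refined[0][0], refined[8][8], refined[15][15])
```

Under these assumptions the top-left pixel of P keeps the patch's own t and the values blend gradually toward the neighbouring patches, which removes the hard edges between blocks. It also shows why patch P can only be finished once the t of Q+1 is available.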

experimental results

To verify the low computational complexity of our method and its feasibility in hardware, we built an image dehazing project in Verilog HDL and implemented it on an Altera FPGA. Fig.4 shows the intermediate outputs and the final output of our method on the FPGA platform. The right margin of the restored image is gray because the mean filter discards the rightmost part of the original transmission map during refinement.

basic analysis of proposed design

| working frequency | 200 MHz | 500 MHz |
| --- | --- | --- |
| cycles (256 pixels) | 100 | 100 |
| power consumption | 5.88 mW | 13.1 mW |
| throughput | 512 Mpixel/s | 1.3 Gpixel/s |
| gate counts | 27.1K | 29.1K |

The timing performance can be estimated as follows. To process a 16×16 patch, the ATMOS and GRAY modules work in parallel and need 96 cycles to process all RGB channels. The OPMAT and MEAN modules need 26 cycles and 100 cycles, respectively, to produce the original and refined transmission maps. The RECOVERY module needs 64 cycles to restore the image. With the pipelined work flow shown in Fig.6, the slowest stage sets the pace, so our proposed hardware implementation needs 100 cycles to process 16×16 pixels and 810,000 cycles to process a 1920×1080 image. At 30 frames per second this amounts to 24.3 million cycles per second, so our design can perform real-time video haze removal at 1920×1080@30fps when running at only 27 MHz.
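The arithmetic behind these figures can be checked with a short script. The stage cycle counts and the patch size come from the text; the function names are hypothetical, and the model simply assumes that a fully pipelined design advances at the rate of its slowest stage.

```python
# Cycles each stage needs per 16x16 patch, as given in the text.
STAGE_CYCLES = {
    "ATMOS/GRAY": 96,
    "OPMAT": 26,
    "MEAN": 100,
    "RECOVERY": 64,
}
PATCH_PIXELS = 16 * 16


def cycles_per_patch(stages=STAGE_CYCLES):
    """In a pipeline, the slowest stage determines the per-patch interval."""
    return max(stages.values())


def cycles_per_frame(width, height):
    """Frame cost, counting patches as pixel count / 256 as the text does."""
    patches = (width * height) / PATCH_PIXELS
    return patches * cycles_per_patch()


def min_clock_hz(width, height, fps):
    """Lowest clock frequency that still sustains the given frame rate."""
    return cycles_per_frame(width, height) * fps


def throughput_pixels_per_s(clock_hz):
    """Pixels processed per second at a given clock frequency."""
    return clock_hz * PATCH_PIXELS / cycles_per_patch()


if __name__ == "__main__":
    print("cycles per frame:", cycles_per_frame(1920, 1080))
    print("minimum clock (Hz):", min_clock_hz(1920, 1080, 30))
    print("throughput at 200 MHz:", throughput_pixels_per_s(200e6))
    print("throughput at 500 MHz:", throughput_pixels_per_s(500e6))
```

The minimum clock works out to 24.3 MHz, which leaves some margin below the quoted 27 MHz, and the throughput values match the table (1.28 Gpixel/s rounds to 1.3 Gpixel/s). Since 1080 is not a multiple of 16, an implementation that pads the frame to whole patches would need slightly more cycles per frame than this estimate.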